Building thy own cloud 🏴☠️

In this little blog post, I’d like to reflect on the last few years and how I built my own cloud service. Today, it serves about a dozen users with services like file storage and sharing, streaming, a Stable Diffusion instance, a JupyterLab and a VPN. I also use it as a homelab.

For security reasons, I won’t go into too many details about the hardware and software. Hope you find this interesting anyway!

The origins

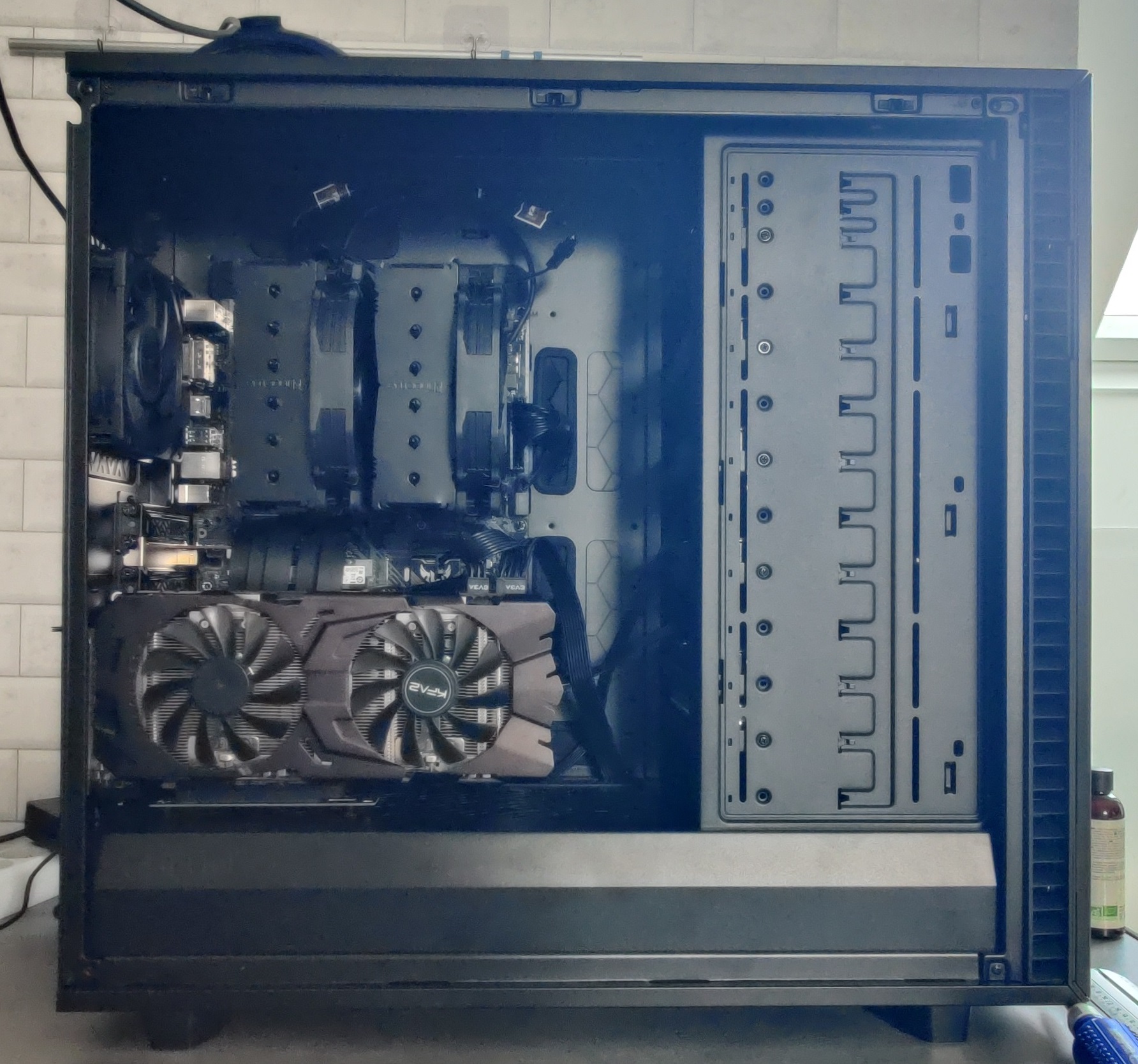

The idea of a server started when I needed to upgrade my first PC, which I got in 2017: a pretty high-end computer at the time, packing an i5-6600K, a GTX 1070 and a whopping 16 GB of RAM. The first thing I changed was the processor, in 2019: I opted for an AMD 3900X, which meant I also had to change the motherboard.

I was happy with this new investment and the power boost it gave my build, but it also meant I had some components on my hands that I didn’t need.

I could have sold them, but only at a fraction of their cost. So instead, borrowing some RAM and a power supply from the leftovers of my old mining rig, I assembled another PC, which certainly didn’t pack a punch, but was enough to experiment a bit. I could leave scripts running for days on end, and play around with Linux and some technologies I'd had an eye on for a while, such as Docker.

This newfound knowledge enabled me to understand, design and set up an infrastructure: I opted for Nextcloud, a self-hosted cloud service. I also used fail2ban for brute-force protection, and SWAG as the reverse proxy and SSL certificate manager.

Over the following years, whenever I upgraded a component in my main PC, I moved the old part into the server, and like the ship of Theseus, one became the other.

I was always very cautious about data integrity, with a pretty strong backup plan: the data was stored on two 2 TB SSDs, mirrored with RAID-1, with an off-site daily backup, and a monthly cold storage backup stored off-site. Solid!

However, despite this, it was difficult to roll back files and settings, and I even lost a few files due to bit rot (which RAID-1 doesn’t protect against). That’s when I really started to look into cleaner and more robust solutions. In 2023, I bought some serious hardware and revamped my infrastructure.
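To make the bit rot point concrete, here is a minimal Python sketch of why a plain mirror can't save you but a checksummed one can. It is only an illustration under my own assumptions: the function names are made up, SHA-256 is just a convenient stand-in, and this is not how ZFS or any RAID implementation actually works internally.

```python
import hashlib


def checksum(block: bytes) -> str:
    """Fingerprint of a block (SHA-256 chosen for illustration only)."""
    return hashlib.sha256(block).hexdigest()


def read_plain_mirror(copy_a: bytes, copy_b: bytes) -> bytes:
    """A plain mirror keeps two copies but has no record of what the data
    should be, so if they differ it cannot tell which one is right.
    This hypothetical reader simply returns the first copy."""
    return copy_a


def read_checksummed_mirror(copy_a: bytes, copy_b: bytes, expected: str) -> bytes:
    """With a checksum stored at write time, the reader can verify each
    copy and return the one that still matches."""
    for copy in (copy_a, copy_b):
        if checksum(copy) == expected:
            return copy
    raise IOError("both copies are corrupted")


# Write time: store the data twice and remember its checksum.
original = b"holiday photo bytes"
expected = checksum(original)

# Later: a single bit silently flips on the first disk.
damaged = bytearray(original)
damaged[3] ^= 0x01
rotten = bytes(damaged)

# The plain mirror happily hands back the damaged copy...
assert read_plain_mirror(rotten, original) == rotten
# ...while the checksummed read falls back to the healthy one.
assert read_checksummed_mirror(rotten, original, expected) == original
```

The takeaway is that redundancy alone only helps when a disk dies outright; to catch silent corruption, something has to remember what the data was supposed to look like.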

The revamp

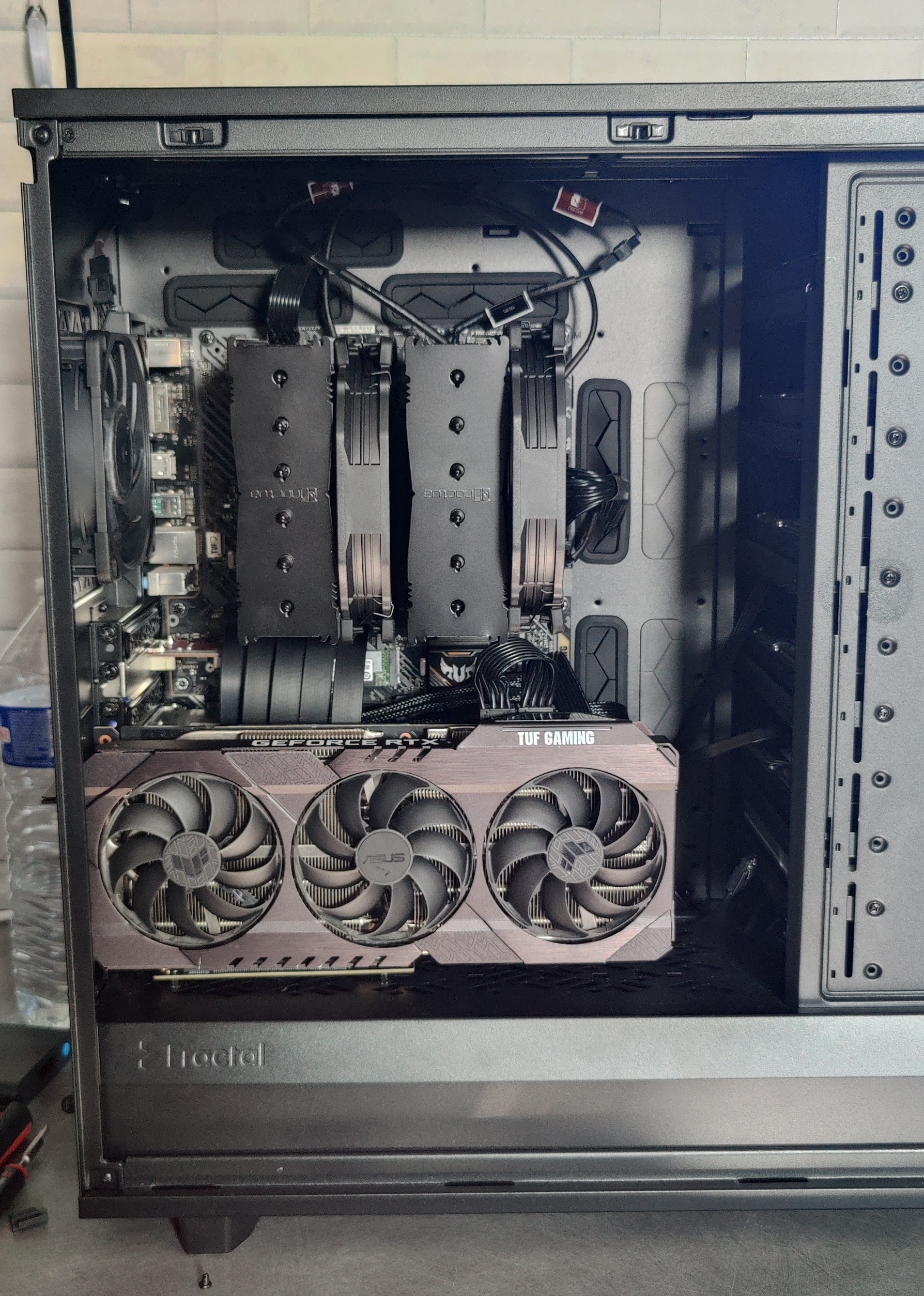

Now we’re talking: instead of 2 TB of usable RAID-1 storage, I now have 32 TB of raw storage, mirrored and striped with ZFS (for a usable space of 16 TB). Zooming in on that, I chose to invest in four 8 TB Seagate Exos drives: enterprise-grade HDDs designed to run non-stop. I also have an 18 TB one, which is for raw storage that isn’t backed up. The system is stored on a top-of-the-line Sabrent 2 TB NVMe drive, and another one is installed and used as an (arguably overkill) L2ARC cache.
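For the curious, the 32 TB raw versus 16 TB usable arithmetic can be sketched in a few lines of Python. This assumes the pool is laid out as two-way mirrors striped together (the function name and parameters are mine, for illustration), and it ignores filesystem overhead and the TB/TiB difference, so real-world usable space comes out somewhat lower.

```python
def striped_mirror_capacity(disk_tb: float, disks: int, mirror_width: int = 2):
    """Return (raw_tb, usable_tb) for identical disks grouped into mirrors.

    Each mirror group stores one disk's worth of data, and striping
    across the groups adds their capacities together.
    """
    if disks % mirror_width != 0:
        raise ValueError("disk count must be a multiple of the mirror width")
    mirror_groups = disks // mirror_width
    raw_tb = disk_tb * disks
    usable_tb = disk_tb * mirror_groups
    return raw_tb, usable_tb


# Four 8 TB drives arranged as two mirrored pairs.
raw_tb, usable_tb = striped_mirror_capacity(disk_tb=8, disks=4)
print(f"raw: {raw_tb} TB, usable: {usable_tb} TB")
```

Half the raw space goes to redundancy, which is the price of being able to lose one disk per mirror pair without losing data.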

I also upgraded the GPU from the original 1070 8 GB to a beefy 3090 24 GB (more VRAM is better for AI stuff), to which I applied brand new thermal paste. All that stuff got a high-quality air cooling system based on Noctua’s NF-A12x25 fans for the case – which is a gigantic Fractal Design Define 7 XL – and a Noctua NH-D15 for the CPU. It gets cool in there 😎

In terms of software, the services are pretty much the same as at the beginning: a good ol’ Debian for the OS, Docker to hold it all together, Nextcloud for the files (fine-tuned for my users’ needs), and a reverse proxy to serve my apps, secured with a bunch of middlewares and a few extras for maximum security.

The future

Currently, the motherboard is the weak point: it’s an ASUS TUF gaming motherboard, which has neither enough M.2 slots nor enough SATA ports (so I use an HBA card). It would need to be replaced anyway, since I’d like to migrate to one of Intel’s latest i9s (probably the 13900K). There are some nice (but hella expensive) server-grade motherboards out there, especially Supermicro’s (e.g. the X13SAE), which meet the requirements. That might be what I go for.

I’ll also max out the RAM of this new motherboard/CPU pair (probably 128 GB) with ECC memory. With that much memory available for the ARC, I would no longer need an L2ARC (for the quantity of data I currently have, anyway), which is nice.

Finally, in the far-far-away future, I’d like to upgrade my server with bleeding-edge GPU accelerators (e.g. A100, H100), but uh… $15K a module? No thanks.

Thanks for reading! Hope to see you around for an update on the progress 😉